Oxford University this week shut down an academic institute run by one of Elon Musk’s favorite philosophers. The Future of Humanity Institute, dedicated to the longtermism movement and other Silicon Valley-endorsed ideas such as effective altruism, closed this week after 19 years of operation. Musk had donated £1m to the FHI in 2015 through a sister organization to research the threat of artificial intelligence. He had also boosted the ideas of its leader for nearly a decade on X, formerly Twitter.

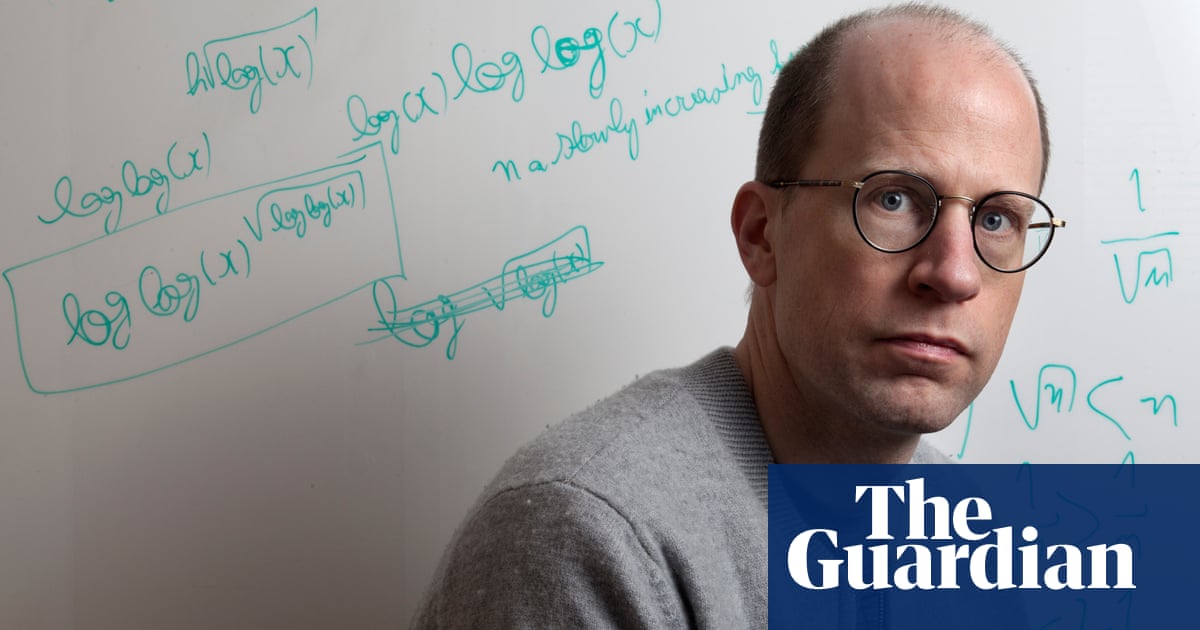

The center was run by Nick Bostrom, a Swedish-born philosopher whose writings about the long-term threat of AI replacing humanity turned him into a celebrity figure among the tech elite and routinely landed him on lists of top global thinkers. OpenAI chief executive Sam Altman, Microsoft founder Bill Gates and Tesla chief Musk all wrote blurbs for his 2014 bestselling book Superintelligence.

…

Bostrom resigned from Oxford following the institute’s closure, he told the Guardian.

The closure of Bostrom’s center is a further blow to the effective altruism and longtermism movements that the philosopher has spent decades championing, which in recent years have become mired in scandals related to racism, sexual harassment and financial fraud. Bostrom himself issued an apology last year after a decades-old email surfaced in which he claimed “Blacks are more stupid than whites” and used the N-word.

…

Effective altruism, the utilitarian belief that people should focus their lives and resources on maximizing the amount of global good they can do, has become a heavily promoted philosophy in recent years. The philosophers at the center of it, such as Oxford professor William MacAskill, also became the subject of immense amounts of news coverage and glossy magazine profiles. One of the movement’s biggest backers was Sam Bankman-Fried, the now-disgraced former billionaire who founded the FTX cryptocurrency exchange.

Bostrom is a proponent of the related longtermism movement, which holds that humanity should concern itself mostly with long-term existential threats such as AI and space travel. Critics of longtermism tend to argue that the movement applies an extreme calculus to the world that disregards tangible current problems, such as climate change and poverty, and veers into authoritarian ideas. In one paper, Bostrom proposed the concept of a universally worn “freedom tag” that would constantly surveil individuals using AI and relate any suspicious activity to a police force that could arrest them for threatening humanity.

…

The past few years have been tumultuous for effective altruism, however, as Bankman-Fried’s multibillion-dollar fraud marred the movement and spurred accusations that its leaders ignored warnings about his conduct. Concerns over effective altruism being used to whitewash the reputation of Bankman-Fried, and questions over what good effective altruist organizations are actually doing, proliferated in the years since his downfall.

Meanwhile, Bostrom’s email from the 1990s resurfaced last year and resulted in him issuing a statement repudiating his racist remarks and clarifying his views on subjects such as eugenics. Some of his answers – “Do I support eugenics? No, not as the term is commonly understood” – led to further criticism from fellow academics that he was being evasive.

As soon as the term ‘effective altruism’ is rolled out, I know exactly what kinda cunt the person is

It definitely feels like Oxford got ahead of the scandals coming down the pipeline by freezing funding in 2020.

Alright I’ll take the bait, what kinda cunt am I? 😅 It’s just an idea that some bad actors (like Sam Bankman-Fried) have run amok with; that doesn’t discredit the idea itself.

What’s wrong with applying your skillset in the free market and then donating a percentage of your salaries to vetted, highly effective charities in lieu of directly working for those same or other charities where your skillset may not be as helpful as straight up cash?

In my view it’s simply a more empirical approach to donating your money to worthwhile causes. It sure as shit does more good than the status quo, where most affluent people just hoard their money and avoid paying taxes as best they can. I think of it as an opt-in tax where a certain percentage of one’s income is earmarked for doing the most good it can in the world, now and in the future. For a long time one of the most effective charities according to GiveWell has been sourcing malaria nets in Africa, which is not really the far futurism this article is criticising.

I know it’s a stupid, petty thing to get hung up on, but I’m weirdly offended by the word “Center” being used instead of “Centre”, in a British article/newspaper for a British Institute at a British University.

It’s the end of civilisation, I tell you.

It’s outrageous! I shall write a strongly worded letter at once!

AI written ;)

Wow, I didn’t know effective altruism was so bad. When someone explained the basic idea to me some years ago, it sounded quite sensible. Along the lines of: “If you give a man a fish, you will feed him for a day. If you teach a man how to fish, you will feed him for a lifetime.”

Like many things it’s a reasonable idea - I still think it’s a reasonable idea. It just tends to get adopted and warped by arseholes

It does on the surface, but the problem is it quickly devolves into extreme utilitarianism. There are some other issues such as:

- How rich is rich enough for EA? Who decides?

- How much suffering is it acceptable to cause while getting rich to deliver EA?

- Is it better to deal with the problems we have now and in the immediate future, or avoid problems in millions of years that we may or may not accurately be able to predict?

- Can you even apply morality and ethics to people that do not exist?

- Who decides what has the most value?

- It’s a cult.

The underlying idea is reasonable. But it was adopted by assholes and warped into something altogether different.

log sqrt(log(x))? No, this can’t be right *furious striking off*. Maybe log(x)log(log(x))? No no no, maybeee log(x)^sqrt(log(x)) - xlog(x)… Yes! I bet this is the formula for the best humans!

This is the best summary I could come up with:

The center was run by Nick Bostrom, a Swedish-born philosopher whose writings about the long-term threat of AI replacing humanity turned him into a celebrity figure among the tech elite and routinely landed him on lists of top global thinkers.

The closure of Bostrom’s center is a further blow to the effective altruism and longtermism movements that the philosopher has spent decades championing, which in recent years have become mired in scandals related to racism, sexual harassment and financial fraud.

A statement on the Future of Humanity’s website claimed that Oxford froze fundraising and hiring in 2020, and in late 2023 the faculty of philosophy decided to not renew the contracts of remaining staff at the institute.

Critics of longtermism tend to argue that the movement applies an extreme calculus to the world that disregards tangible current problems, such as climate change and poverty, and veers into authoritarian ideas.

In one paper, Bostrom proposed the concept of a universally worn “freedom tag” that would constantly surveil individuals using AI and relate any suspicious activity to a police force that could arrest them for threatening humanity.

“We unequivocally condemn Nick Bostrom’s recklessly flawed and reprehensible words,” the Centre for Effective Altruism, which was founded by fellow Oxford philosophers and financially backed by Bankman-Fried, said in a statement at the time.

The original article contains 869 words, the summary contains 219 words. Saved 75%. I’m a bot and I’m open source!

Unrelated, but what makes you guys trust sources like the Guardian so much? Ever since they spread toxic misinformation, or at the very best a guided, pessimistic truth without context, about Pewdiepie back in 2017, they pretty much just lost me.

I feel like I have had a significant number of experiences in which most of these more well-established news outlets dealt in misinformation.

They were probably truthful back in the day. But I don’t feel that any more.

I’m not really sure what toxic misinformation about Pewdiepie they spread (got a source?) but The Guardian is a left-leaning newspaper that does top notch investigative journalism (when we have very few of either of those in the UK). However, as with every source, you have to understand their inherent biases and read around a subject to get a more balanced view of any story you are interested in. So my major concern with The Guardian is they got a reputation for running TERF articles. I believe they have rectified this to some extent but it’s something I keep an eye out for. It is still one of the best newspapers in the country and, in regards to this article, I can’t really fault their coverage as the TREACLES have been a cause for concern for a while - this article may even be too restrained but there are other outlets really chasing this issue. See: !sneerclub@awful.systems.

I appreciate your elaboration.

As for a source, here is one I had in mind: Guardian link

My reasoning is that I feel they took it out of context and made it so that to new eyes it could seem like he possibly actually supported antisemitism. In reality, anybody watching the original context with a head on their shoulders would know he made the people write the worst thing that came to mind on the spot for a joke. Funny or not doesn’t really matter, as they made him seem like there was a possibility he was the exact opposite of what he really is.

I don’t see that as “spread toxic misinformation”. The video is tied to an article reporting that YouTube and Disney cut ties with him because of the content of his videos, based on an investigation by the Wall Street Journal.

The videos did not directly promote Nazi ideology, instead using the imagery and phrasing of fascism for its shock value alone.

That seems like pretty standard reporting to me, unless I am missing something.